Computer Vision Pipeline Benchmarking

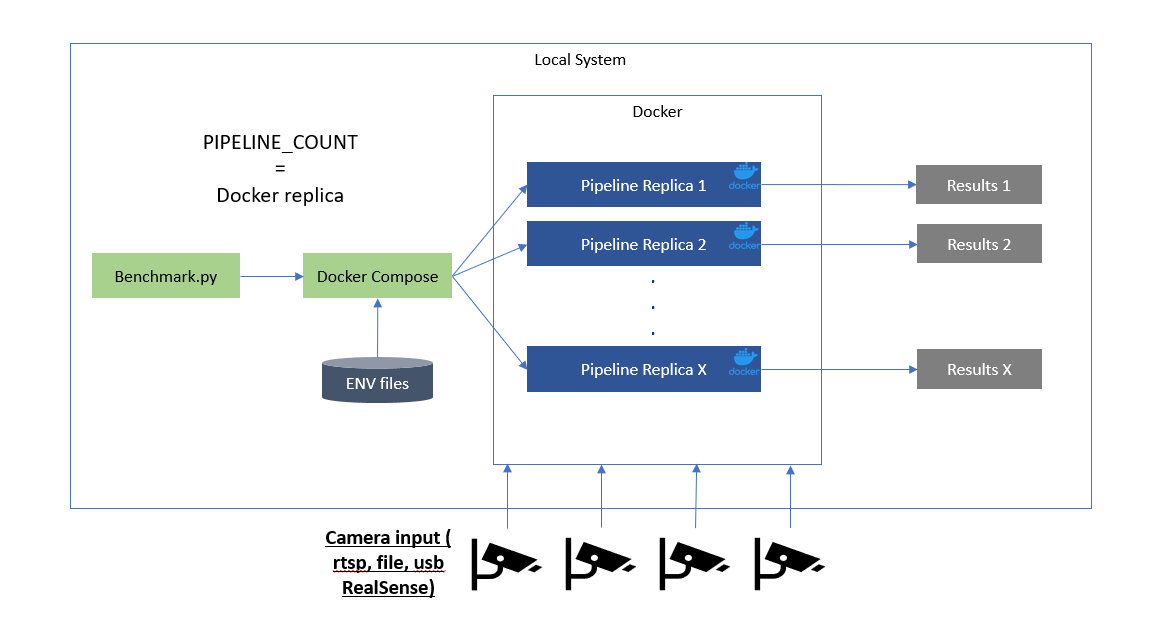

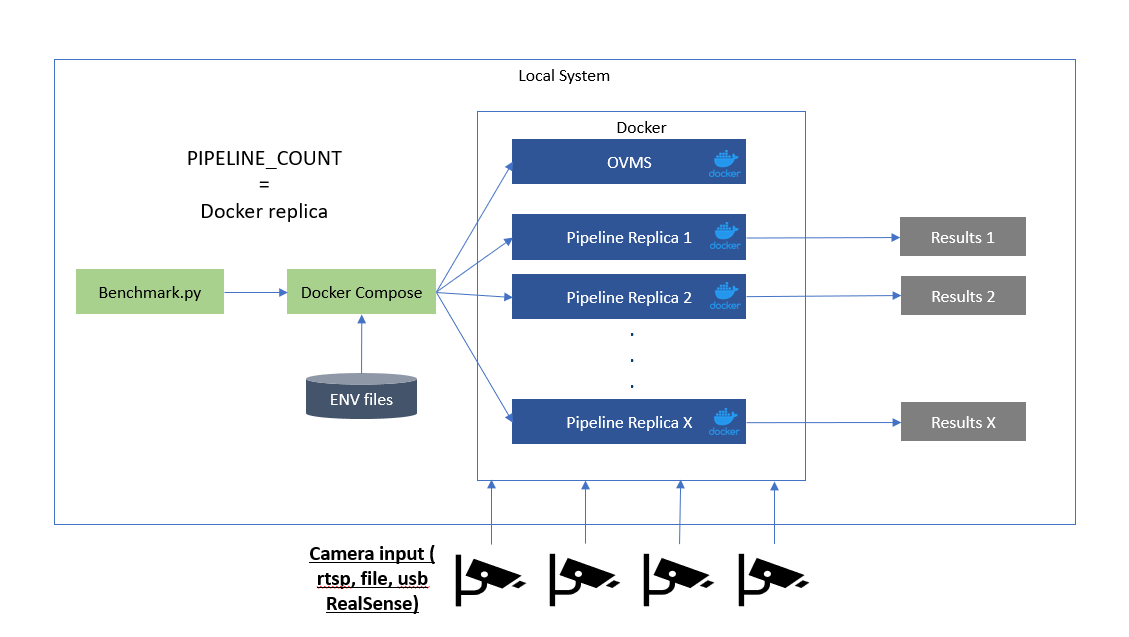

The provided Python-based script works with Docker Compose to get pipeline performance metrics like video processing in frames-per-second (FPS), memory usage, power consumption, and so on.

Prerequisites

- Docker

- Docker Compose

- Make

- Git

-

Code from Retail Use Cases Repo and its submodule Performance Tools Repo

Note

To install the submodule, run

make update-submodulesfrom the root of the retail-use-cases repo. -

Python environment v3.12.2

Note

This could be accomplished using Miniconda and creating a Python 3.12.2 env

sudo apt install git gcc python3-venv python3-dev

Benchmark a CV Pipeline

-

Build the benchmark container and change into the benchmark-scripts directory.

cd performance-tools/ make build-benchmark-docker -

Python packages listed in performance-tools/benchmark-scripts/requirements.txt

cd performance-tools/benchmark-scripts/ python3 -m venv venv source venv/bin/activate pip install -r requirements.txt -

[Optional] If NPU data collection is desired, ensure that the following is correct.

a. Run the following command to get the correct path to the NPU under

/sys/devicesb. Ensure the environment variable NPU_PATH in performance-tools/docker/docker-compose.yaml for the npu-util service or the global variable in performance-tools/docker/npu-util/npu_logger.py is set to the correct location.lspci | grep -i npuNPU_PATH="/sys/devices/pci0000:00/0000:<insert_results>/npu_busy_time_us"Example

If the lspci command is:

$ lspci | grep -i npu 00:0b.0 Processing accelerators: Intel Corporation Lunar Lake NPU (rev 04)then the NPU_PATH is:

NPU_PATH="/sys/devices/pci0000:00/0000:00:0b.0/npu_busy_time_us" -

Choose a CV pipeline from the Retail Use Cases Repo, Automated Self-Checkout or Loss Prevention and note the file paths to the docker compose files.

-

Run the benchmarking script using the docker compose file(s) as inputs to the script (sample command shown below).

Automated Self-Checkout:

python benchmark.py --compose_file ../../src/docker-compose.yml --pipeline 1Retail Use Cases:

python benchmark.py --compose_file ../../use-cases/gst_capi/add_camera-simulator.yml --compose_file ../../use-cases/gst_capi/add_gst_capi_yolov5_ensemble.yml

Go to Arguments to understand how to customize the benchmarks

Specific number of pipelines with single container

Specific number of pipelines with OVMS and Client

Benchmark Stream Density for CV Pipelines

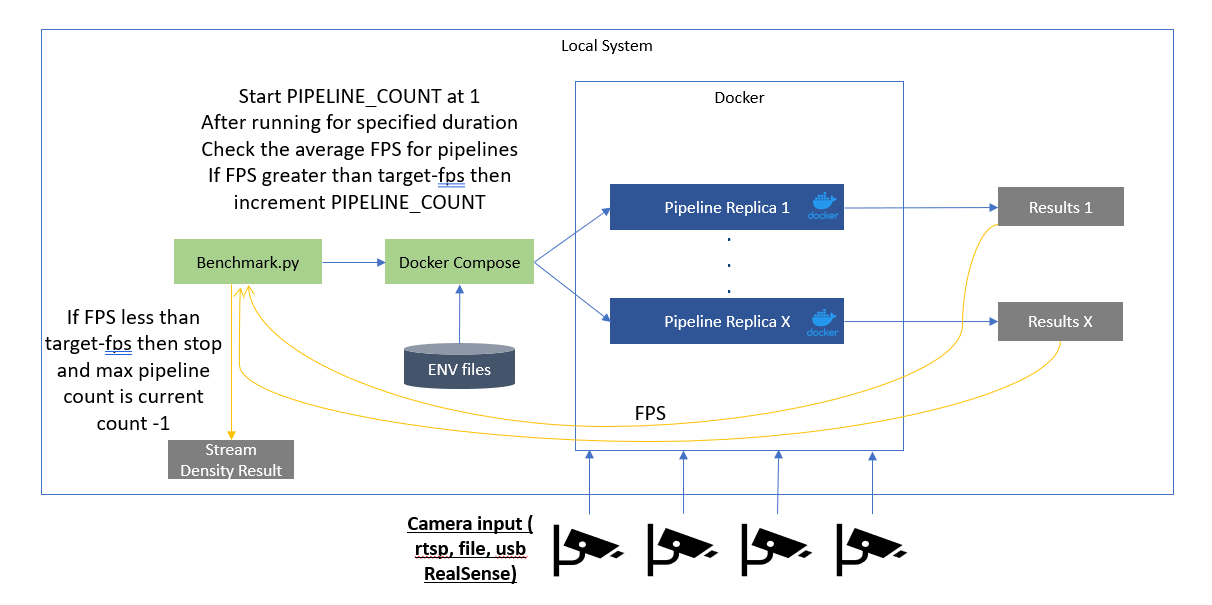

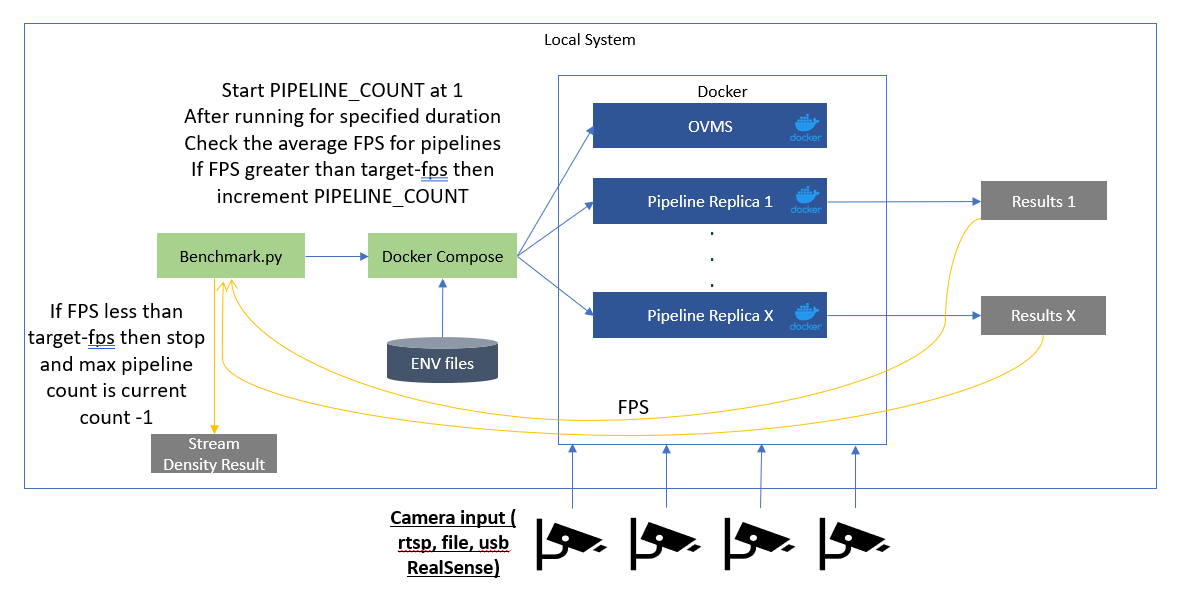

Benchmarking a pipeline can also discover the maximum number of workloads or streams that can be run in parallel for a given target FPS. This information is useful to determine the hardware required to achieve the desired performance for CV pipelines.

To run the stream density functionality use --target_fps and/or --density_increment as inputs to the benchmark.py script:

python benchmark.py --retail_use_case_root ../../retail-use-cases --target_fps 14.95 --density_increment 1 --init_duration 40 --compose_file ../../retail-use-cases/use-cases/grpc_python/docker-compose_grpc_python.yml

where the parameters:

target_fpsis the given target frames per second (fps) to achieve for maximum number of pipelinesdensity_incrementis to configure the benchmark logic to increase the number of pipelines each time while trying to find out the maximum number of pipelines before reaching the given target fps.-

init_durationis the initial duration period in second before pipeline performance metrics are takenNote

It is recommended to set --target_fps to a value lesser than your target FPS to account for real world variances in hardware readings.

Stream density with single container

Stream density with OVMS and Client

Consolidate results

The consolidate_multiple_run_of_metrics.py script processes and consolidates performance metrics from various log files (JSON, CSV, and text-based logs) into a structured report. It extracts key performance indicators (KPIs) such as CPU & GPU utilization, memory bandwidth, disk I/O, power consumption, and FPS from multiple sources, aggregates the data, and outputs a summary file.

on peformance-tools/benchmark-scripts:

make consolidate

The summary.csv content should look like this:

Camera_20250303214521714278352 FPS,14.86265306122449

Camera_20250303214521714278352 Last log update,03/03/2025 14:46:263943

CPU Utilization %,10.069166666666668

Memory Utilization %,19.70717535119376

Disk Read MB/s,0.0

Disk Write MB/s,0.002814426229508197

S0 Memory Bandwidth Usage MB/s,8012.58064516129

S0 Power Draw W,19.159666666666666

Plot Utilization Graphs

After running a benchmark, you can generate a consolidated CPU, NPU, and GPU usage graph based on the collected logs using:

on peformance-tools/benchmark-scripts:

make plot

plot_metrics.png) under the results directory, showing:

🧠 CPU Usage Over Time

⚙️ NPU Utilization Over Time

🎮 GPU Usage Over Time for each device found

Modifying Additional Benchmarking Variables

Arguments

| Argument | Type | Default Value | Description |

|---|---|---|---|

--pipelines |

int |

1 |

Number of pipelines |

--target_fps |

float (list) |

None |

Stream density target FPS; can take multiple values for multiple pipelines with 1-to-1 mapping via --container_names |

--container_names |

str (list) |

None |

Container names for stream density target; used together with --target_fps for 1-to-1 mapping |

--density_increment |

int |

None |

Pipeline increment number for stream density; dynamically adjusted if not specified |

--results_dir |

str |

'./results' |

Full path to the directory for logs and results |

--duration |

int |

30 |

Time in seconds, not needed when --target_fps is specified |

--init_duration |

int |

20 |

Initial time in seconds before starting metric data collection |

--target_device |

str |

'CPU' |

Desired running platform [cpu, core, xeon, dgpu.x] |

--compose_file |

str (list) |

None |

Path(s) to Docker Compose files; can be used multiple times |

--retail_use_case_root |

str |

'../../' |

Full path to the retail-use-cases repo root |

--docker_log |

str |

None |

Docker container name to get logs from and save to a file |

--parser_script |

str |

'./parse_csv_to_json.py' |

Full path to the parsing script to obtain FPS |

--parser_args |

str |

"-k device -k igt" |

Arguments to pass to the parser script; pass args with spaces in quotes: "args with spaces" |

Change Power Profile

- For Ubuntu, follow this documentation to change the power profile.

- For Windows, follow this documentation to change the power mode.

Change or Customize Metric Parsing

Two arguments --parser_script and --parser_args control the script and arguments passed to it respectively from the benchmark script.

- The

--parser_scriptcan be a python script that takes at least an input argument of-d <results_dir>. This will automatically get passed to the parsing script from the benchmarking script. - Any other arguments may be passed using the

--parser_args, where arguments with spaces are specified in double quotes.

Developer Resources

Python Testing

To run the unit tests for the performance tools:

cd benchmark-scripts

make python-test

To run the unit tests and determine the coverage:

cd benchmark-scripts

make python-coverage